Did you know that much of today’s AI research tackles near-term ethical issues like bias and privacy, while a separate line of work looks at the long-term risks of advanced AI systems? This split shows the gap between AI ethics and AI safety. Both are key to making AI responsible.

AI is getting more advanced fast. So, we must deal with both current ethical issues and future risks. In this article, we’ll look at the main differences between AI ethics and AI safety. We’ll see the unique challenges and what’s important in each area.

Key Takeaways

- AI ethics deals with today’s ethical problems, like bias and privacy in AI.

- AI safety looks at the big risks of advanced AI, like unintended effects as AI gets smarter.

- AI ethicists and safety experts often work on the same issues but have different priorities and views.

- There’s a lot of debate in the AI world, partly because advanced AI systems are still poorly understood. Researchers disagree about which risks deserve the most attention.

- We need to work together to close the gap between AI ethics and safety. This helps us handle the challenges of AI’s fast growth.

Understanding AI Ethics and AI Safety

Artificial intelligence (AI) is growing fast, and there is more focus on making these technologies ethical and responsible. AI ethics is the set of principles and values that guide how we design, build, and deploy AI systems. The main goal is to tackle issues like bias, privacy violations, and other harms from AI.

AI Ethics: Addressing Current Ethical Concerns

Ethical considerations matter at every stage of an AI project. Everyone on the team needs to work together to make sure AI is used responsibly. The approach should be tailored to each project, since some AI projects carry more ethical risk than others.

Creating ethical AI starts with a culture of responsible innovation. We need a strong governance structure that makes sure AI is fair, safe, and ethical. This means using frameworks like the “SUM Values” and “FAST Track Principles”, introduced in the Alan Turing Institute’s guide to AI ethics and safety, to guide us.

Following these principles helps AI developers make systems that are fair, accountable, sustainable, and transparent. This means using unbiased data, preventing discrimination, and making sure there’s a clear chain of responsibility. It also means being able to check how the system works from start to finish.

AI safety looks at the big risks of advanced AI, like systems doing things we don’t want them to do. It’s important to think about both ethics and safety to move AI forward responsibly.

| Ethical AI | Responsible AI |
|---|---|
| Ensures AI systems follow moral and social values | Focuses on the wider societal impact of AI |
| Engages with bias and fairness concerns | Emphasizes transparency and accountability |

The Rise of AI and Ethical Considerations

Artificial intelligence (AI) has changed many fields, like fintech, healthcare, and e-commerce. But this growth has also raised big ethical questions. We need to think about the right way to build and use AI.

AI systems can reproduce the biases in the data they learn from, which is a serious problem. Without careful checks, AI could make unfair decisions in lending, hiring, and criminal justice. This leads to unequal treatment and undermines fairness and equality.
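One simple check for this kind of unfairness is to compare approval rates across groups. The sketch below is only an illustration: the loan decisions, the group labels `A` and `B`, and the helper names are all made up for this example. The ratio it prints is often compared against the “four-fifths” rule of thumb from US employment guidance, though a low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Hypothetical loan decisions: (applicant group, approved?)
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.3f}")
```

In this toy data, group A is approved 80% of the time and group B 50% of the time, so the ratio falls below 0.8 and the result would be worth a closer look.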

There’s also worry about AI taking away our privacy and freedom. With AI looking at our personal info, we need strong rules to protect our data and be clear about how it’s used.

AI can also cause problems we didn’t plan for. If AI doesn’t work right or is made badly, it could hurt people. This shows we must make AI safely and think about its ethical sides.

“The rise of AI has brought immense benefits, but it has also raised critical ethical concerns that must be addressed. As we continue to harness the power of this technology, we must do so with a deep commitment to ethical principles and the well-being of all.”

We need a careful way to make and use AI. This means adding ethical rules to AI design and making clear rules for using this tech right.

By thinking ahead on ethical issues, we can make the most of AI. This will help make sure AI helps everyone, not just a few. As AI grows, we all have to help shape it. We should make sure it fits with our ethical values and protects everyone’s well-being.

Challenges in Implementing Responsible AI

As AI becomes more common, the need for responsible AI grows. But making AI responsible is hard because of a lack of clear rules and guidance.

There’s no worldwide agreement on how to build and use AI systems. This makes it hard to keep AI safe and ethical. New AI tools like GPT-4 Turbo and AI diagnostic tools often reach users with little independent checking of whether they’re safe or ethical. This leaves a big gap in oversight.

Lack of Clear Guidance and Regulation

AI systems are complex, making it tough to use them responsibly. Finding bias, assigning accountability, and keeping users safe and their data private is hard. Without clear rules, companies and developers struggle to apply ethical principles consistently. This often leads to bad outcomes.

| Key Challenge | Description |
|---|---|
| Lack of Clear Guidance | The absence of globally accepted standards and frameworks for responsible AI makes it difficult to ensure safety and ethical compliance. |
| Complexity of AI Systems | The inherent complexity of AI systems makes it challenging to detect bias, guarantee accountability, and ensure user privacy and safety. |
| Diverse Array of New AI Instruments | The emergence of new AI instruments, such as GPT-4 Turbo and AI diagnostic tools, without any regulatory oversight raises concerns about their safety and ethics. |

It’s important to overcome these challenges to get the benefits of AI safely. We need clear guidance and rules for responsible AI.

AI Ethics vs AI Safety: Key Differences Explained

As AI grows in power, it’s key to know the difference between AI ethics and AI safety. Both aim to make sure AI is used right, but they focus on different parts of the issue.

AI ethics deals with the big ethical issues like bias, unfairness, and privacy. It uses moral rules and values to make sure AI is good for society and people. It creates rules and best practices for making and using AI.

AI safety looks at the big risks of advanced AI, like bad outcomes and goals that don’t match ours. It’s about making sure AI works for humanity’s benefit. This means making sure AI has goals that are good for us.

| AI Ethics | AI Safety |
|---|---|
| Addresses current ethical concerns, such as bias, discrimination, and privacy issues. | Focuses on long-term risks, including unintended consequences and misaligned goals that could threaten humanity. |
| Applies moral principles and values to ensure AI systems align with societal norms and human well-being. | Emphasizes the importance of value alignment, where AI systems reliably pursue goals beneficial to humanity. |
| Develops guidelines, frameworks, and best practices to guide responsible AI development and deployment. | Explores methods to ensure the safe and reliable development of advanced AI systems. |

AI ethics and AI safety have different main goals but work together for responsible AI. They tackle both immediate ethical issues and long-term risks. This way, AI can help and empower people in the future.

“The greatest challenge in building safe and ethical AI systems is ensuring that the systems behave in ways that are consistent with human values and intentions.”

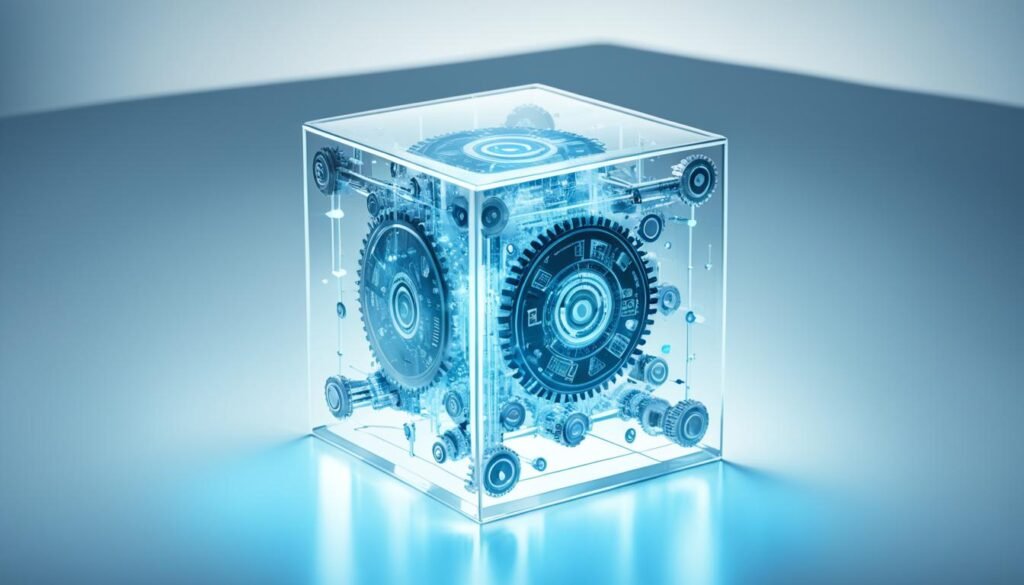

The Complexity of AI Systems

AI systems are becoming more complex as they advance, which makes it hard for humans to understand or check their results. This makes it tough to ensure AI is safe and ethical.

It’s hard to see why AI makes certain decisions, which makes it hard to spot bias or privacy issues. We need to understand how AI works to make sure it’s responsible. This is key for developing AI that respects our values.

AI’s complexity brings unique challenges for responsible AI, AI safety, and AI ethics. Figuring out how AI works is a big task that needs expertise from computer science, the social sciences, and policy.

| Position | Description |
|---|---|
| Advanced AI Poses A Global Catastrophic Existential Risk (AIX) | This stance emphasizes the potential for advanced AI to pose existential threats on a global scale, highlighting the need for robust safety measures and oversight. |
| AI Is (What We Don’t Like About) Capitalism (AIC) | This perspective focuses on the socioeconomic impacts of AI, arguing that it exacerbates inequalities and challenges the autonomy of individuals and communities. |
| AI Offends Liberal Values (AIL) | This position raises concerns about the threats posed by AI to liberal democratic processes, including issues related to misinformation, manipulation, and inegalitarianism. |

As AI gets more complex, we need strong responsible AI rules and AI safety measures. It’s important to bring together experts from different fields. This helps tackle the challenges AI brings.

“The debate surrounding AI safety and ethics often revolves around the prioritization of these views (AIX, AIC, AIL) in terms of research focus, resource allocation, and political attention.”

Managing AI’s complexity is a big task that touches on many areas. It’s not just a tech issue but also affects society, the economy, and politics. By working together across disciplines, we can make AI that’s both advanced and ethical.

Regulatory Efforts and Global Initiatives

As concerns about AI regulation, governance, and legislation grow, governments and international groups are responding. They aim to create rules and global initiatives for responsible and ethical AI. These steps are meant to make sure AI technology is safe and used responsibly.

AI Governance and Legislation

In recent years, more AI-related laws have been passed. Countries want to set rules for how to manage and govern AI. The UK has set up the AI Safety Institute to research and evaluate the risks of advanced AI.

The European Union has also acted, with the EU’s AI Act. This law has rules for making, using, and deploying AI in the EU. Other places like China, Saudi Arabia, the UAE, and Brazil are making their own rules for AI.

These rules are important for the future of AI. They make sure AI is made and used in an ethical way. By having clear rules, everyone can work together to make AI better. This helps avoid risks and challenges.

Working together across the world is key to these rules. The Bletchley Declaration was signed by 28 countries and the European Union at the AI Safety Summit held at Bletchley Park in the UK in November 2023. The G7 nations, OECD, and GPAI also support global AI standards. This shows a big effort to make AI safe and sustainable.

As AI changes, these rules and efforts will be crucial. They will help make sure AI brings benefits without harming people or society.

Balancing Innovation and Ethical Considerations

The world of AI innovation is growing fast. Companies must balance their drive for new tech with ethical AI values. This balance is key to making sure new AI development doesn’t harm people or society.

Leaders and policymakers are now focusing on responsible AI. They’re setting up rules and guidelines for AI accountability. These rules help make sure AI is fair, clear, and trustworthy.

Putting people first is a big part of this. It means thinking about what users need and want, not just about tech progress. Working together and listening to different voices helps make AI innovation that fits with what society values and builds trust.

Setting up global AI governance rules is also key. It helps deal with the challenges of AI development across borders. This way, ethical AI standards are the same everywhere, preventing problems that could hurt the future of AI.

As AI innovation keeps growing, keeping a balance between progress and responsibility is crucial. By focusing on ethical AI, companies can make the most of AI development. This way, they protect people and society while moving forward.

“The pursuit of innovation should never come at the expense of upholding fundamental ethical principles. Responsible AI development is the key to unlocking the boundless potential of this transformative technology.”

The Role of Transparency and Explainability

As AI becomes more common in our lives, AI transparency and AI explainability are key. They help make responsible, ethical AI that is accountable.

Modern AI models, like large language models, are hard to understand. This makes it tough to see how they work and make decisions. Without clear explanations, people worry about bias and unfairness.
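One common way to probe a model you can’t read directly is to shuffle one input at a time and see how much the model’s accuracy drops, often called permutation importance. The sketch below is a toy illustration only: the `model` function, the three input columns, and the data are invented for this example, and a real audit would use held-out data and repeat the shuffle several times.

```python
import random


def model(row):
    """Hypothetical black-box scorer: only income and debt matter."""
    income, debt, shoe_size = row
    return 1 if income - 2 * debt > 0 else 0


def accuracy(rows, labels):
    """Share of rows where the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, column, seed=0):
    """Accuracy drop after shuffling one column across all rows."""
    rng = random.Random(seed)
    shuffled = [r[column] for r in rows]
    rng.shuffle(shuffled)
    permuted = [
        r[:column] + (value,) + r[column + 1:]
        for r, value in zip(rows, shuffled)
    ]
    return accuracy(rows, labels) - accuracy(permuted, labels)


# Made-up applicants: (income, debt, shoe_size)
rows = [(50, 10, 42), (20, 15, 38), (80, 30, 44),
        (30, 5, 40), (10, 20, 41), (60, 35, 39)]
labels = [model(r) for r in rows]  # labels the model gets fully right

for name, column in [("income", 0), ("debt", 1), ("shoe_size", 2)]:
    drop = permutation_importance(rows, labels, column)
    print(f"{name}: accuracy drop {drop:.2f}")
```

Shuffling `shoe_size` never changes the result because this toy model ignores it, which is the kind of evidence that helps show which inputs a system actually relies on.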

Policymakers, researchers, and industry leaders are working to make AI clearer. Laws like the GDPR and the EU AI Act push for more openness and responsibility in AI.

A study by Zendesk shows how important this is. 65% of CX leaders see AI as a strategic necessity, and 75% of businesses believe a lack of transparency in AI could lead to more customer churn.

Finding the right balance in AI is hard. We can’t always show everything, but trying to make AI clearer helps build trust. This is key for ethical AI.

By focusing on AI transparency and AI explainability, we can make the most of responsible and ethical AI. This leads to better results for everyone.

| Statistic | Relevance |
|---|---|
| 65 percent of CX leaders see AI as a strategic necessity | Highlights the importance of AI transparency in customer experience |
| 75 percent of businesses believe that a lack of transparency in AI could lead to increased customer churn | Emphasizes the need for transparency in AI systems to build trust and retain customers |
| Regulations and frameworks such as the GDPR, the OECD AI Principles, and the EU Artificial Intelligence Act aim to ensure transparency in AI systems | Demonstrates the growing regulatory efforts to address the challenges of AI transparency and accountability |

Future Directions and Ethical Frameworks

The future of AI is bringing up big questions about ethical AI frameworks. Experts, lawmakers, and business leaders are working hard to create rules and standards for AI systems. They aim to make sure AI is used in a responsible way.

Many are worried about the lack of clear rules for AI. A survey by Conversica found that 86% of companies using AI think we need better guidelines. But only 6% have made their own responsible AI rules. This shows we need a strong, shared effort to make AI safe and ethical.

AI is making big decisions in important areas like hiring, health care, and justice. For example, ProPublica’s analysis of the COMPAS risk-assessment tool found that Black defendants were 45% more likely than white defendants to be given a higher risk score, even after accounting for prior offenses and actual reoffending.
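A disparity like the one described above can be checked by comparing error rates across groups, for instance how often people who did not reoffend were still labeled high risk. The sketch below uses invented records and hypothetical group names `X` and `Y`. It is not COMPAS data and does not reproduce any published analysis.

```python
def make_group(wrongly_flagged, correctly_cleared, reoffended):
    """Build toy records; every reoffender is marked high risk for simplicity."""
    return (
        [{"reoffended": False, "high_risk": True}] * wrongly_flagged
        + [{"reoffended": False, "high_risk": False}] * correctly_cleared
        + [{"reoffended": True, "high_risk": True}] * reoffended
    )


def reoffence_rate(records):
    """Share of people in the group who reoffended."""
    return sum(r["reoffended"] for r in records) / len(records)


def false_positive_rate(records):
    """Share of non-reoffenders who were still labeled high risk."""
    negatives = [r for r in records if not r["reoffended"]]
    if not negatives:
        raise ValueError("no non-reoffenders in this group")
    return sum(r["high_risk"] for r in negatives) / len(negatives)


# Made-up groups with the same reoffence rate but different scoring errors
group_x = make_group(wrongly_flagged=4, correctly_cleared=6, reoffended=10)
group_y = make_group(wrongly_flagged=2, correctly_cleared=8, reoffended=10)

for name, group in [("X", group_x), ("Y", group_y)]:
    print(
        f"group {name}: reoffence rate {reoffence_rate(group):.2f}, "
        f"false positive rate {false_positive_rate(group):.2f}"
    )
```

Both toy groups reoffend at the same rate, yet group X’s non-reoffenders are flagged twice as often. That gap in false positives is what an audit would report.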

“The advancement of AI automation has the potential to replace human jobs, but AI also has the potential to create far more jobs than it destroys, according to some arguments.”

To fix these issues, experts are looking at ethical AI frameworks. These focus on being open, clear, and accountable. Companies are trying to make sure AI works for everyone and does good, not harm.

As AI changes the world, we must work together to make sure it’s safe and ethical. By creating strong ethical rules, we can make sure AI helps us all without hurting anyone.

| Key Insights | Statistics |
|---|---|
| Lack of clear guidance and regulation on AI use | 86% of organizations using AI see the need for clearly stated guidelines, but only 6% have established guidelines for responsible AI |
| Bias in AI-powered decision making | ProPublica’s analysis of COMPAS found Black defendants were 45% more likely than white defendants to receive a higher risk score, after accounting for prior offenses and reoffending |
| Potential impact of AI automation on jobs | AI has the potential to both replace and create human jobs |

Conclusion

AI is getting more common in our lives, making it crucial to balance innovation with ethics. This article looked into the key differences between AI ethics and AI safety. It showed we need a wide-ranging approach to make AI responsible.

AI ethics deals with today’s ethical issues like fairness and transparency. AI safety looks at the long-term risks and bad outcomes of advanced AI. We must focus on making AI systems strong, clear, and aligned with what humans want to avoid harm.

There are big challenges in making AI responsible, like a lack of rules and AI’s complex nature. We need a team effort from everyone involved. Groups that make rules, global projects, and ethical guides must work together. They should set standards that help us use AI safely and wisely.

FAQ

What is the difference between AI ethics and AI safety?

AI ethics deals with current ethical issues like bias and privacy. It uses moral principles to address these problems. AI safety looks at the long-term risks of advanced AI, like unintended harm and misaligned goals.

What are the key ethical considerations in the rise of AI technology?

AI raises concerns about bias, rights violations, and unintended harm. It’s important to develop and use AI responsibly to address these issues.

What are the challenges in implementing responsible AI practices?

Challenges include a lack of clear rules and the complexity of AI systems. This makes it hard to spot bias, ensure accountability, and protect privacy and safety.

How do AI ethics and AI safety differ in their priorities and approaches?

AI ethics focuses on current ethical issues. AI safety looks at long-term risks of advanced AI. These two areas have different goals in guiding AI development.

How is the complexity of AI systems a challenge for ensuring their safety and ethical integrity?

AI systems’ complexity makes it hard for humans to understand or check their results. This complexity is a big challenge in making AI safe and ethical.

What regulatory efforts and global initiatives are shaping the responsible development of AI?

Governments and international groups are creating rules and initiatives for AI. This includes national laws, the AI Safety Institute in the UK, and the EU’s AI Act. These efforts help guide AI’s responsible use.

How can organizations and policymakers balance innovation and ethical considerations in AI development?

It’s important to balance innovation with ethics. This can be done by using AI ethics guidelines and safety techniques. These help design fair, safe, and ethical AI.

Why are transparency and explainability important for responsible AI development?

Transparency and explainability help address bias and accountability issues. But modern AI’s complexity makes it hard to be transparent and explainable. Working toward both is key to building trust and following ethical principles.

What are the future directions for developing robust ethical frameworks for AI?

Researchers, policymakers, and leaders are creating guidelines and standards for AI. A joint effort from various groups is needed to put ethical and safety first in AI innovation.